There are various ways to make digital content unusable, or even harmful, for AI training and AI search.

Data Poisoning

One way to do this is called data poisoning. In this process, the pixels of an image are invisibly modified before sharing it online. While the image remains unchanged to the human eye, AI systems interpret it incorrectly or may be unable to process it at all.
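To give a sense of how small such pixel changes can be, here is a minimal Python sketch. It is not the method of any particular tool: it shifts each colour value by a random amount of at most `epsilon`, whereas real poisoning tools compute their changes against a machine learning model instead of drawing them at random. The function name `perturb`, the `epsilon` value and the flat grey test image are assumptions made for illustration.

```python
import numpy as np


def perturb(image: np.ndarray, epsilon: int = 2, seed: int = 0) -> np.ndarray:
    """Return a copy of an 8-bit image with every value shifted by at most epsilon."""
    rng = np.random.default_rng(seed)
    # Random integer offsets in [-epsilon, +epsilon] (upper bound is exclusive).
    # Assumption: real tools optimise these offsets against a model; here they are random.
    noise = rng.integers(-epsilon, epsilon + 1, size=image.shape)
    # Use a wider integer type so values cannot wrap around, then clip to 0..255.
    shifted = image.astype(np.int16) + noise
    return np.clip(shifted, 0, 255).astype(np.uint8)


# Stand-in for a real artwork: a flat mid-grey 64x64 RGB image.
original = np.full((64, 64, 3), 128, dtype=np.uint8)
modified = perturb(original)

# Largest change applied to any single colour value.
max_change = int(np.abs(modified.astype(np.int16) - original.astype(np.int16)).max())
print("largest per-value change:", max_change)
```

On a scale of 0 to 255, a shift of one or two steps per colour value is very hard to see, which is why such an image can look unchanged to a viewer while its raw data differs.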

When applied systematically and on a large scale, data poisoning can degrade the quality of entire generative AI models. A model trained on manipulated image data learns inaccurate associations and, as a result, produces inappropriate or erroneous outputs for text-to-image prompts.

For artists, this technique offers a way to continue displaying their work online while preventing it from being used for AI training. This creates a form of resistance against the automated exploitation of artists' works.

Tools for data poisoning

Glaze

Glaze uses machine learning algorithms to make minimal changes to artworks that are imperceptible to the human eye, but appear as entirely different works to AI models. This causes the AI to misclassify the piece. The changes are essentially invisible to us, yet they are part of the image itself, so taking a screenshot or applying other editing methods does not reverse the process.

How?

Tutorials:

Glaze user guide: Link

Go to the tool: Link

Glaze as Webtool: Link

(For people who don't have a computer or whose GPU is not powerful enough)

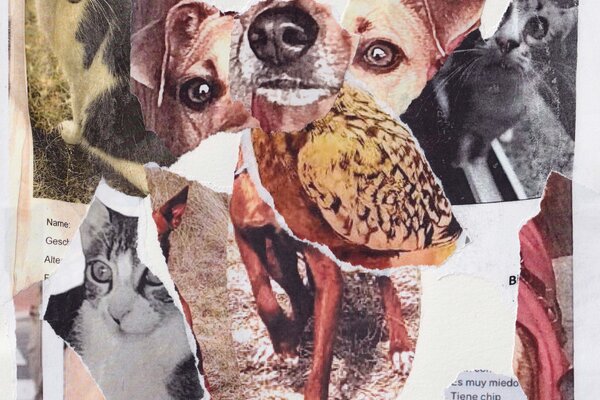

Nightshade

Nightshade modifies a work in a way that appears as subtle shadows to the human eye, but is read by the AI as an entirely different image. For instance, where a human recognises a cow, the AI might interpret the image as a leather bag. As it is trained on more such images, the AI develops the incorrect association that a 'cow' is a leather bag, leading to increasingly inaccurate results.

Because using unlicensed works can now damage the model, this approach makes licensing artworks a more realistic alternative for AI providers.
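The cow and leather bag example can be illustrated with a toy Python sketch. This is not Nightshade's algorithm: it assumes a heavily simplified "model" that stores each concept as the average of two-dimensional feature points, and all numbers and names (`cow_features`, `learned_concept`, and so on) are hypothetical. Poisoned samples carry the label 'cow' but sit where bag features sit, so the learned idea of 'cow' drifts towards 'bag'.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 2-D features a model might extract from images.
cow_features = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(100, 2))
bag_features = rng.normal(loc=[5.0, 5.0], scale=0.5, size=(100, 2))

# Poisoned samples: they look like cows to people and are labelled "cow",
# but to the model their features resemble leather bags.
poisoned_cows = rng.normal(loc=[5.0, 5.0], scale=0.5, size=(100, 2))


def learned_concept(samples: np.ndarray) -> np.ndarray:
    """Toy stand-in for training: a concept is the mean of its samples."""
    return samples.mean(axis=0)


bag_concept = learned_concept(bag_features)
clean_cow = learned_concept(cow_features)
poisoned_cow = learned_concept(np.vstack([cow_features, poisoned_cows]))

# Distance between the learned "cow" concept and the "bag" concept.
dist_clean = float(np.linalg.norm(clean_cow - bag_concept))
dist_poisoned = float(np.linalg.norm(poisoned_cow - bag_concept))
print("cow-to-bag distance without poison:", round(dist_clean, 2))
print("cow-to-bag distance with poison:   ", round(dist_poisoned, 2))
```

In this toy setup the distance shrinks once the poisoned samples are included, which mirrors the idea that a prompt for a cow starts to yield something closer to a bag.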

Tutorial: Link

Go to the tool: Link

Dynamic watermarks

A dynamic watermark embeds an invisible or semi-visible code into digital content. Unlike regular watermarks, this code can change over time or in response to specific situations.
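One way to picture a code that changes over time is the following Python sketch. It is an illustration under stated assumptions, not a description of any specific watermarking product: the code is derived from a secret key and a time window number using HMAC, and is hidden in the least significant bit of the first pixel values. The names `watermark_bits`, `embed`, `extract` and the key `demo-key` are hypothetical.

```python
import hashlib
import hmac

import numpy as np


def watermark_bits(secret: bytes, time_window: int, n_bits: int = 64) -> np.ndarray:
    """Derive a bit pattern that depends on both the secret and the time window."""
    digest = hmac.new(secret, str(time_window).encode(), hashlib.sha256).digest()
    bits = np.unpackbits(np.frombuffer(digest, dtype=np.uint8))
    return bits[:n_bits]


def embed(image: np.ndarray, bits: np.ndarray) -> np.ndarray:
    """Write the bits into the least significant bit of the first pixel values."""
    flat = image.flatten()  # flatten() returns a copy, so the input stays untouched
    flat[: bits.size] = (flat[: bits.size] & 0xFE) | bits
    return flat.reshape(image.shape)


def extract(image: np.ndarray, n_bits: int = 64) -> np.ndarray:
    """Read the hidden bits back out of the image."""
    return image.flatten()[:n_bits] & 1


# Stand-in for real content: a flat 16x16 greyscale image.
image = np.full((16, 16), 200, dtype=np.uint8)

bits_window_1 = watermark_bits(b"demo-key", time_window=1)
bits_window_2 = watermark_bits(b"demo-key", time_window=2)

marked = embed(image, bits_window_1)
recovered = extract(marked)
print("watermark recovered:", bool(np.array_equal(recovered, bits_window_1)))
```

Each pixel value changes by at most one step, so the mark is not visible, and anyone holding the secret can recompute the expected bits for a given time window and compare them with what is found in a copy.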